Using Language Models to (probably) Read Faster

Idea

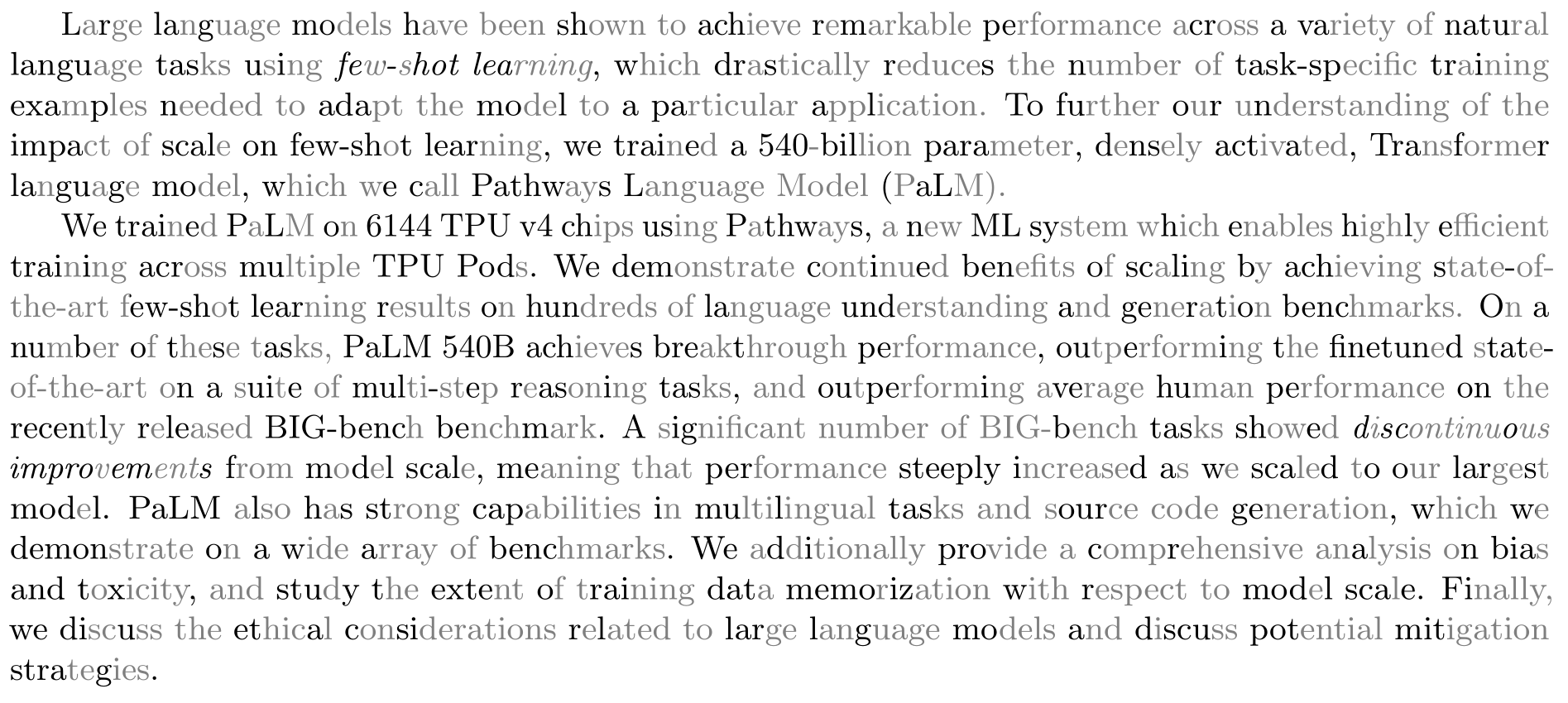

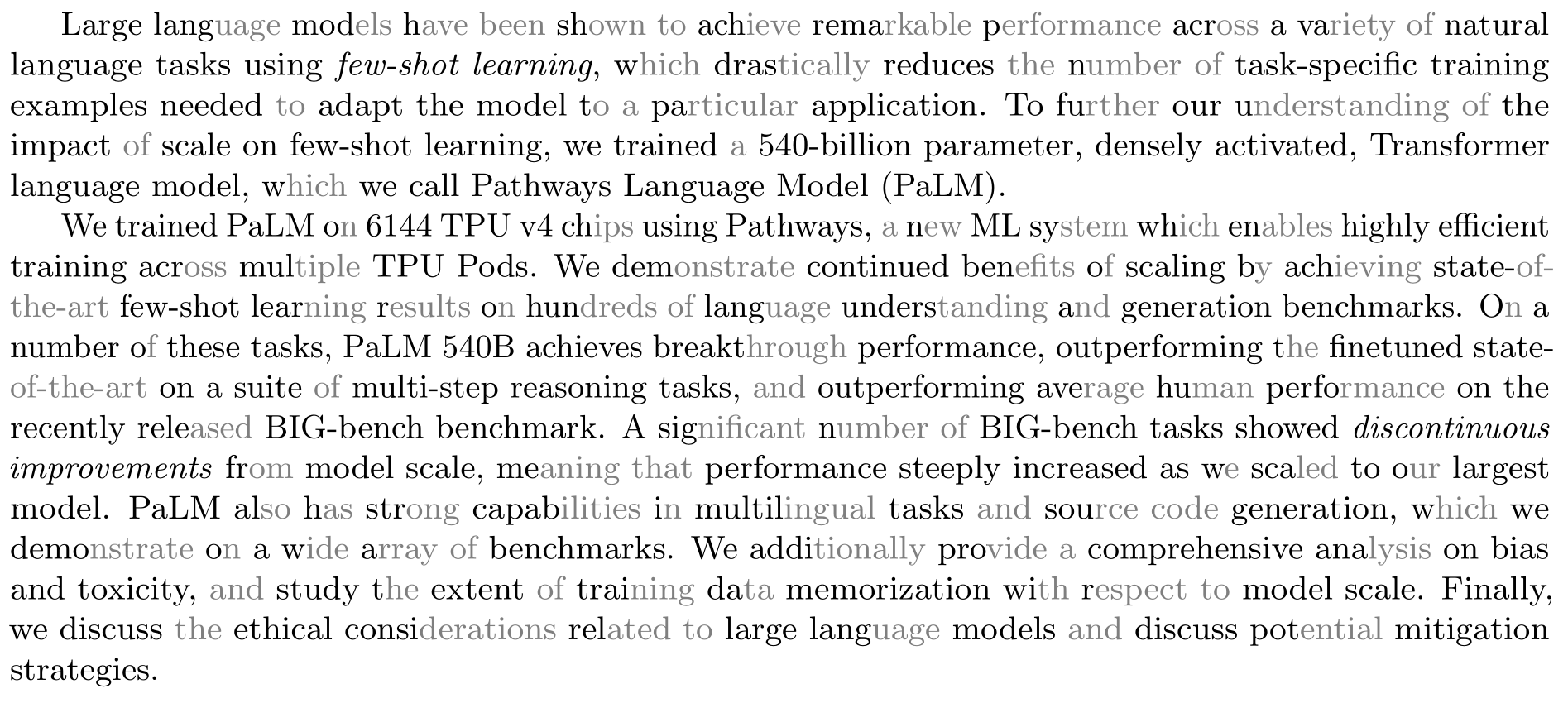

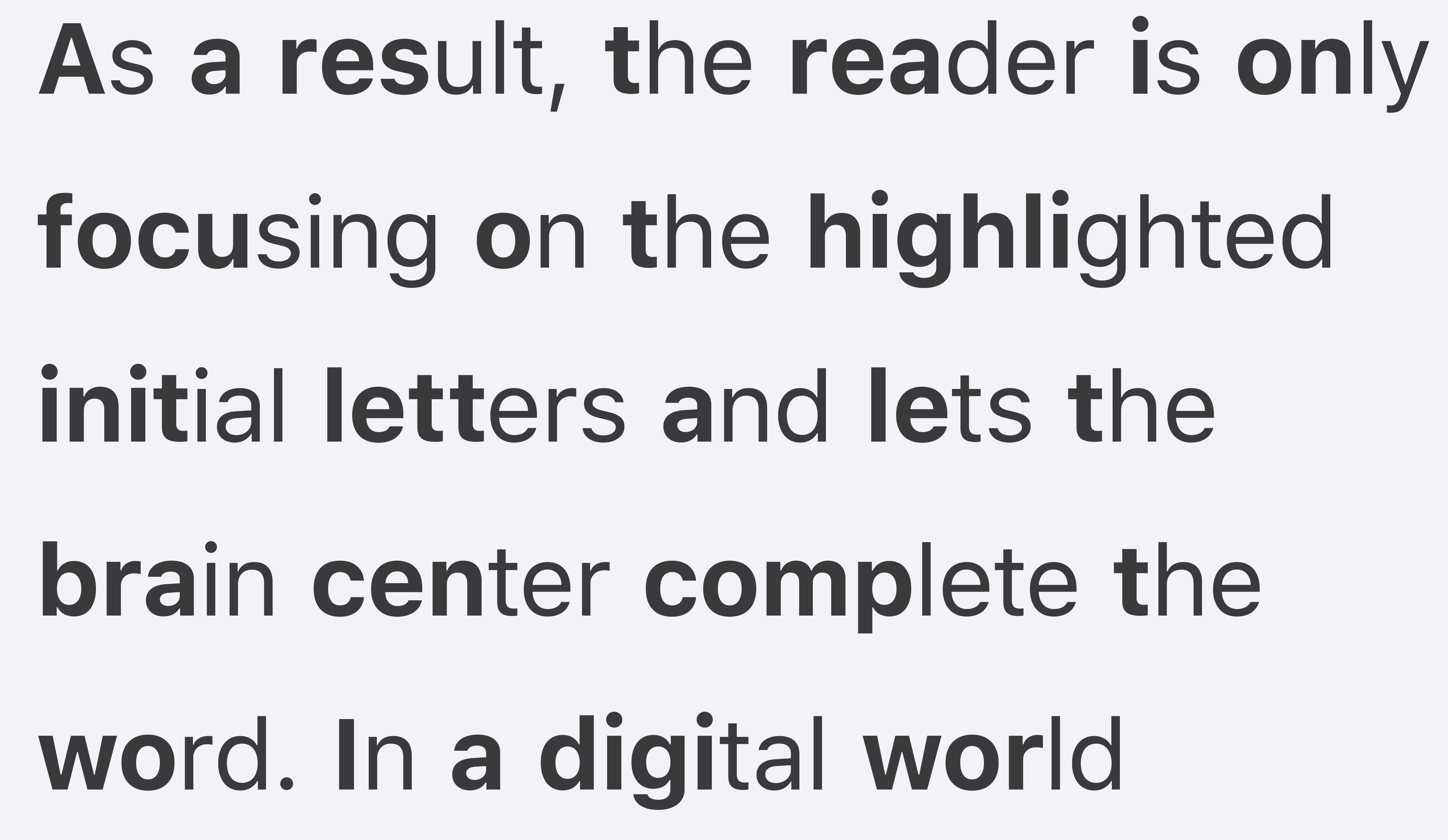

A couple of weeks ago I saw this hackernews article about a method of text rendering to increase text readability. The algorithm is pretty simple: highlight the first few characters of each word (how many characters depends on the size of the word). Here is a screenshot of what it looks like from its website:

That got me thinking: what if instead of using a heuristic method to determine how many characters to highlight, we used a language model? Specifically we highlight the character only when the language model fails to predict the character given its preceding context. Presumably if a language model is smart enough to predict the character, so are we!

Implementation

First of all, we need a character-based language model. I used a

single-character version of reformer fine

tuned for enwiki8 dataset which is available on

huggingface (as I will mention in the notes section, this is a huge overkill but whatever, this is just an experiment ;) ). Let’s test it:

import torch

from transformers import ReformerModelWithLMHead

model = ReformerModelWithLMHead.from_pretrained("google/reformer-enwik8")

# removed for brevity, you can find them on the hugginface repo homepage

def encode(list_of_strings, pad_token_id=0): ...

def decode(outputs_ids): ...

def generate_next_char(text, n_chars=1):

return decode(model.generate(encode([text])[0],

max_length=len(text)+n_chars))

>>> generate_next_char("This is a ")

"This is a s"

>>> generate_next_char("This is a p")

"This is a pr"

>>> generate_next_char("This is a pr")

"This is a pro"

>>> generate_next_char("This is a pre")

"This is a prec"

>>> generate_next_char("This is a pred")

"This is a prede"

>>> generate_next_char("This is a predi")

"This is a predic"

>>> generate_next_char("This is a predic")

"This is a predict"

>>> generate_next_char("This is a predict")

"This is a predicti"

>>> generate_next_char("This is a predicti")

"This is a predictio"

>>> generate_next_char("This is a predictio")

"This is a prediction"

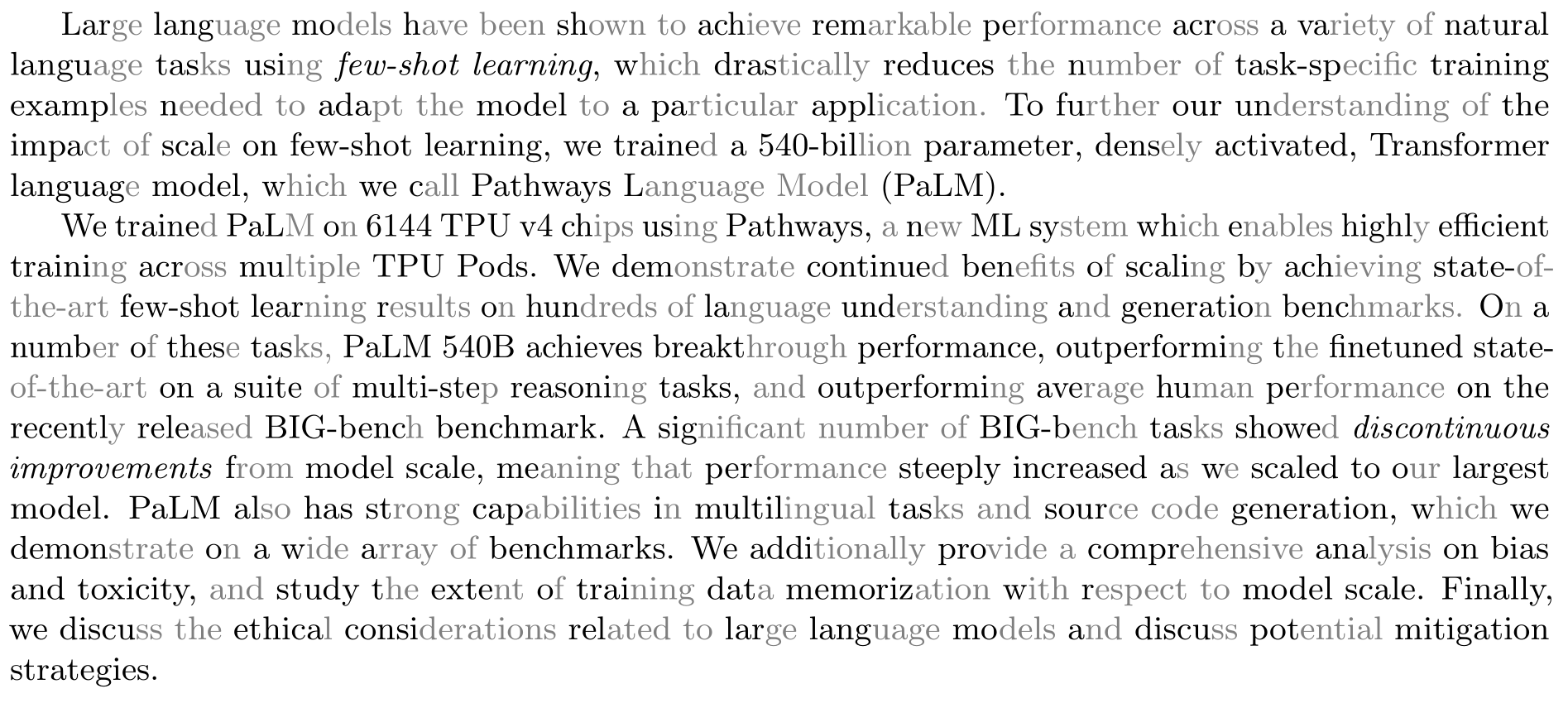

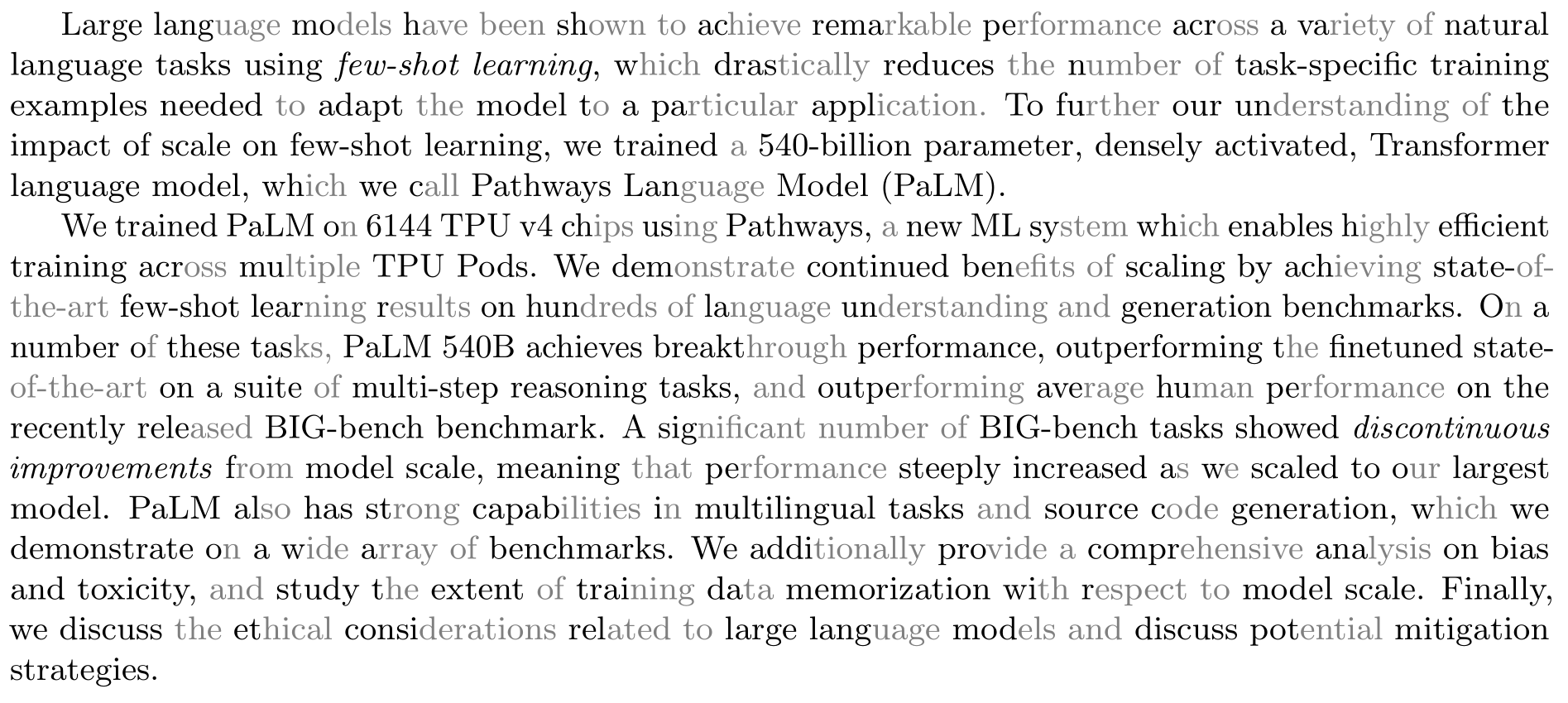

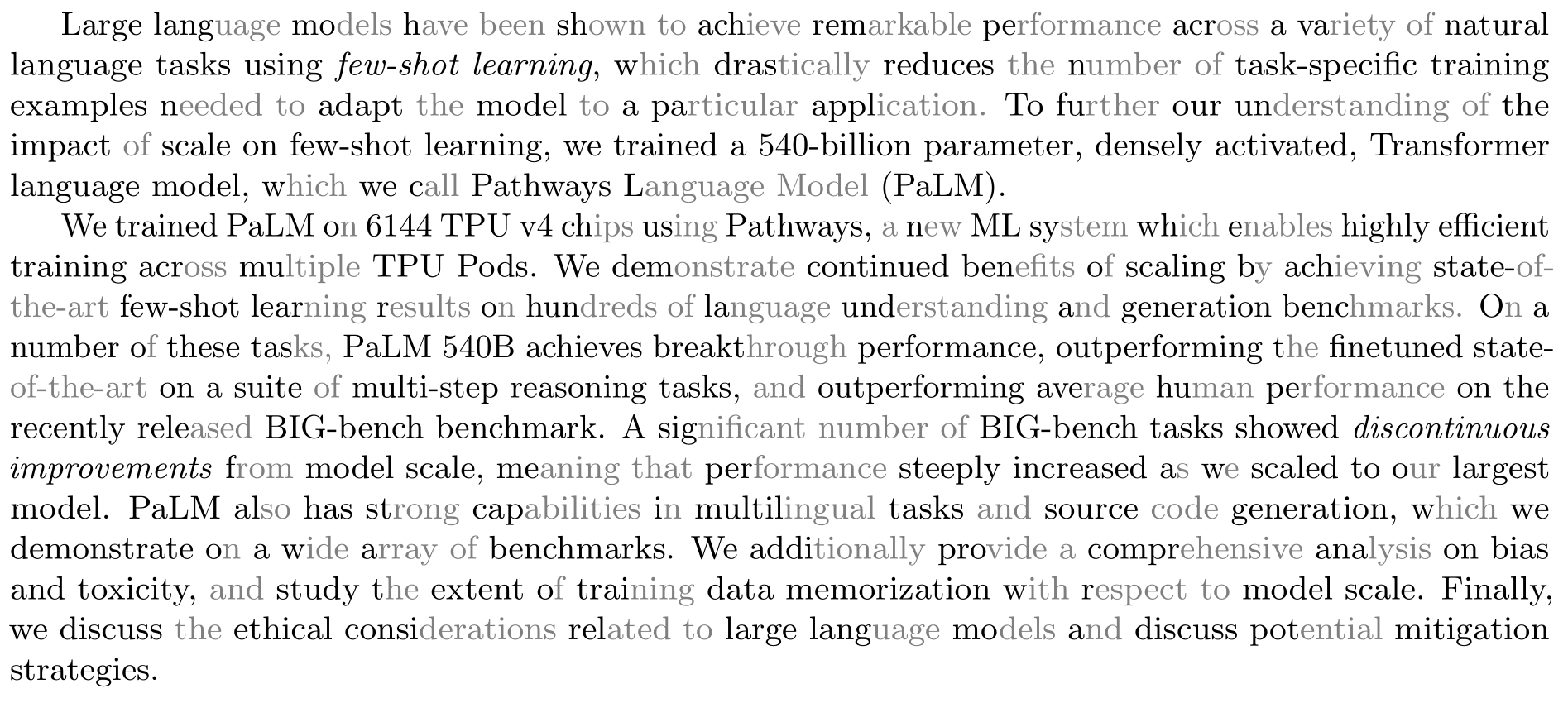

If we wanted to highlight the word “prediction” using the language model, it would look something like this: prediction, only the characters which language model got wrong are highlighted. I implemented this in sioyek PDF reader and the results look like this (if it looks blurry open the image in a new tab and zoom in):

I find sudden highlights in the middle of a word a little off-putting, let’s change it so that a word is highlighted from the begining until the last mispredicted character. Using this scheme, prediction would become prediction (I call this process refinement). It looks like this in sioyek:

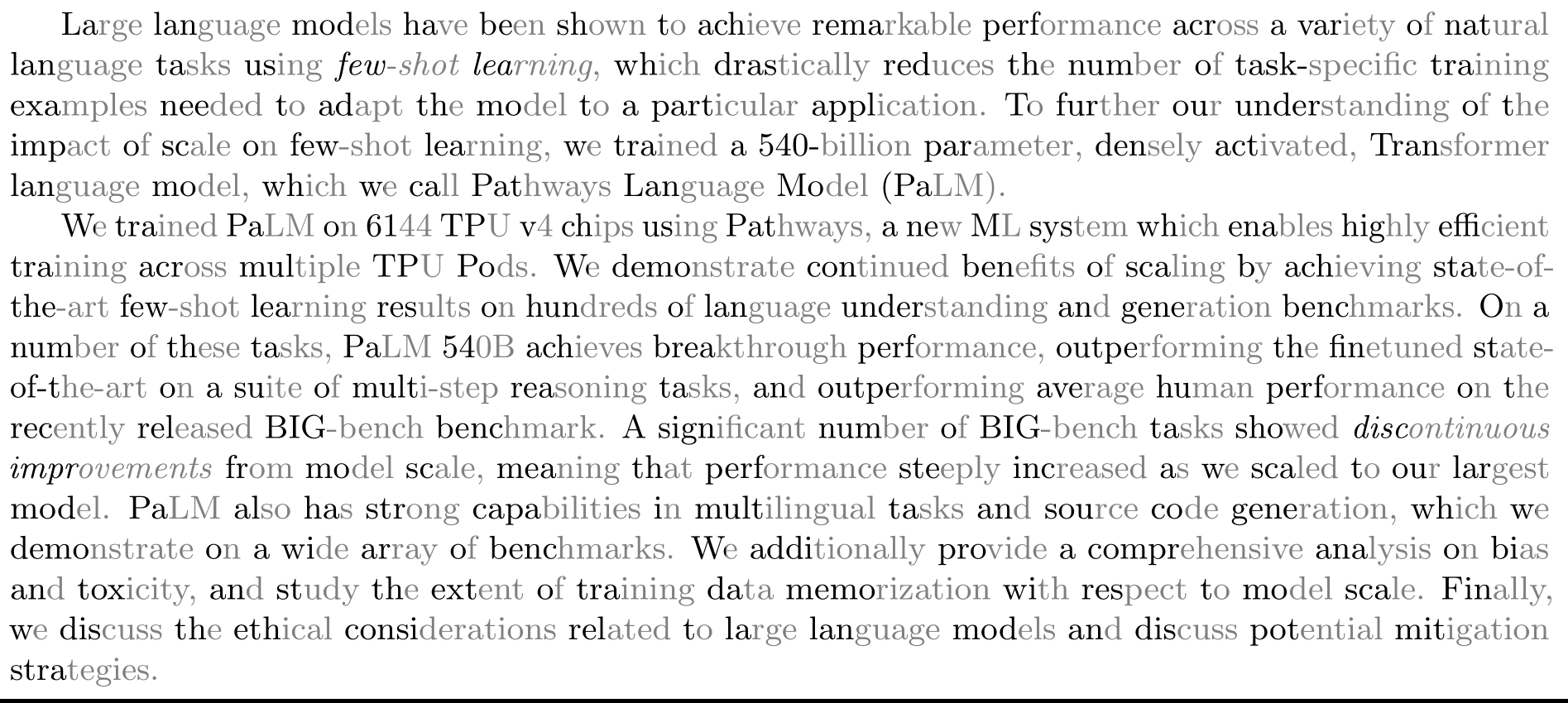

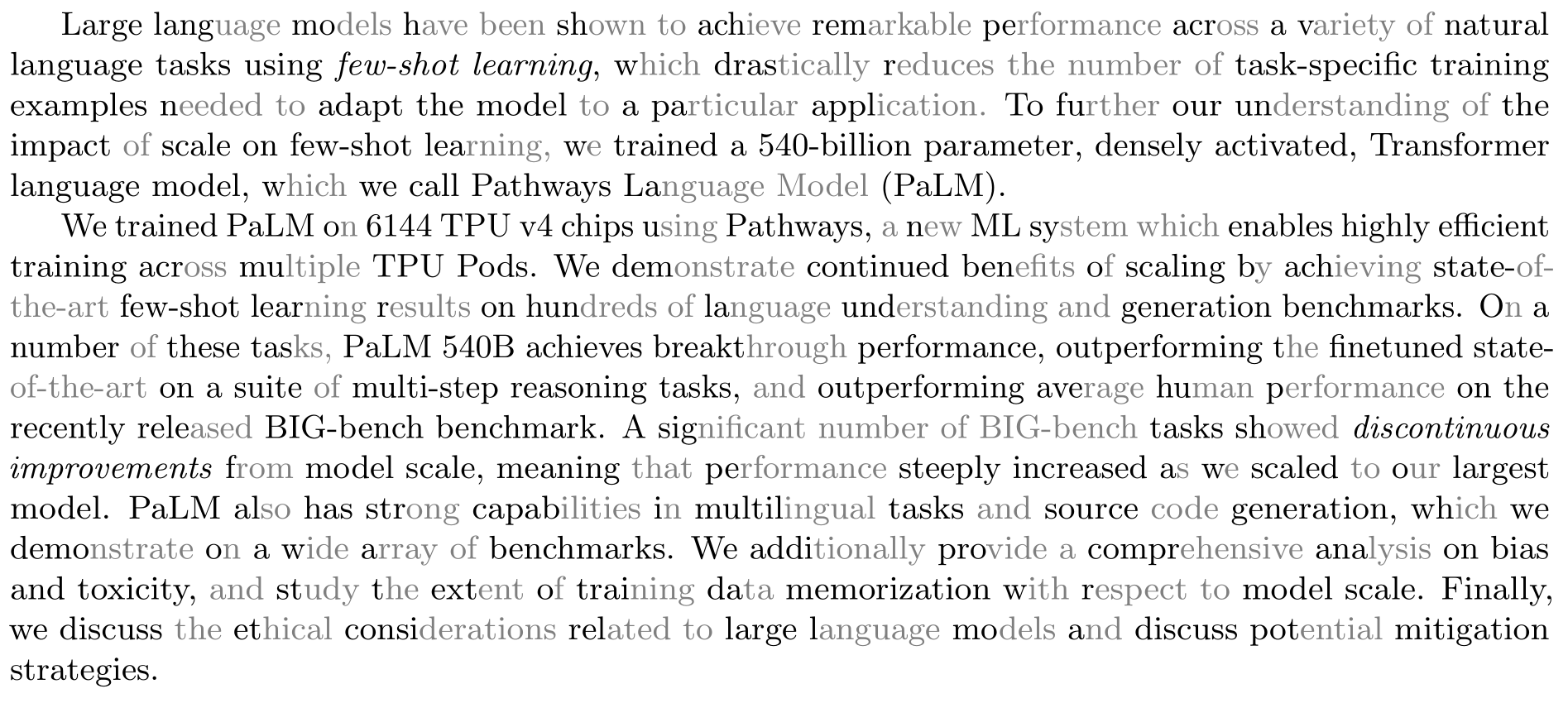

It looks much better, but still words like continued annoy me. If I have already read most of the word, there is little benefit in hiding the rest. So I changed it such that if more than 50% of a word is highlighted, we highlight the entire word (I call this process filling). It looks like this:

Here is a comparison of different highlight modes and the original (bionic) heuristic:

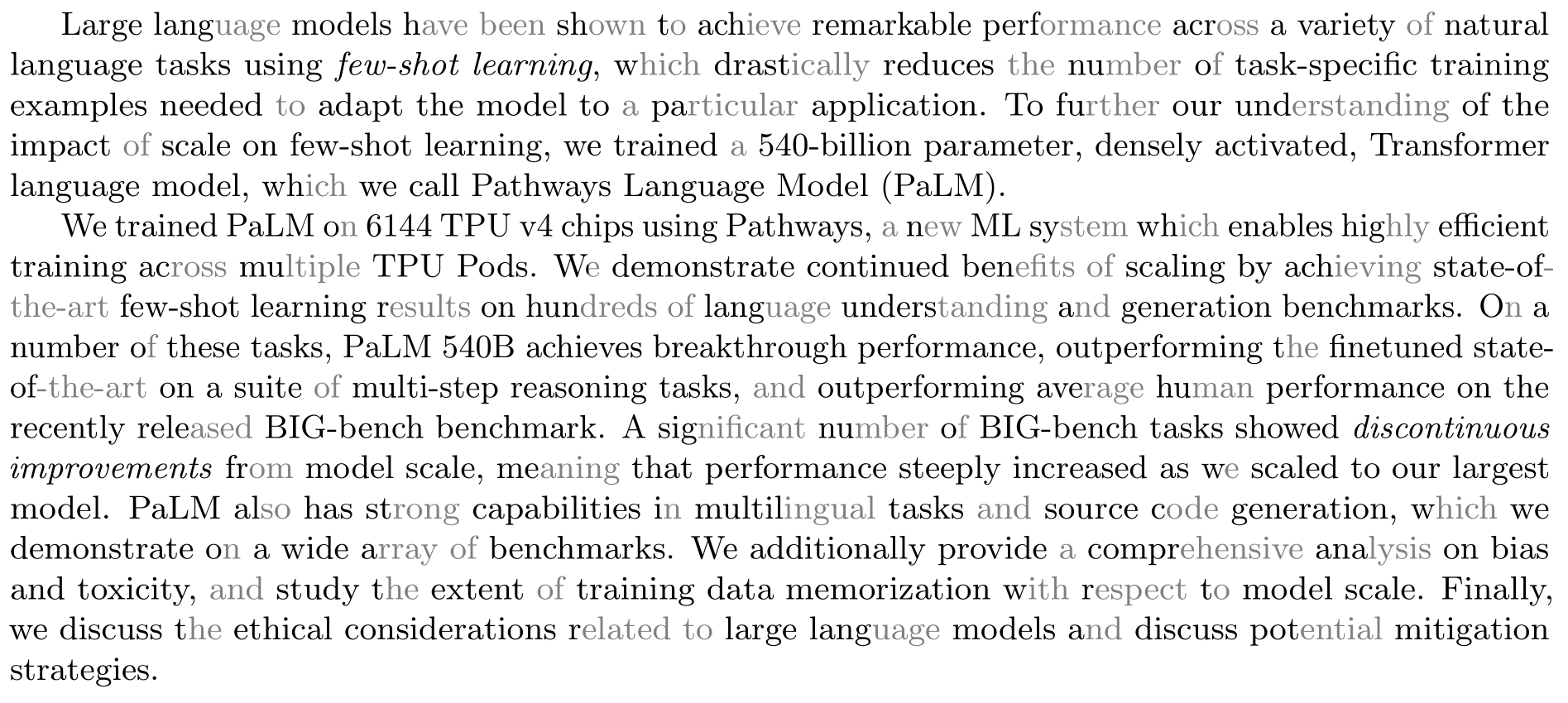

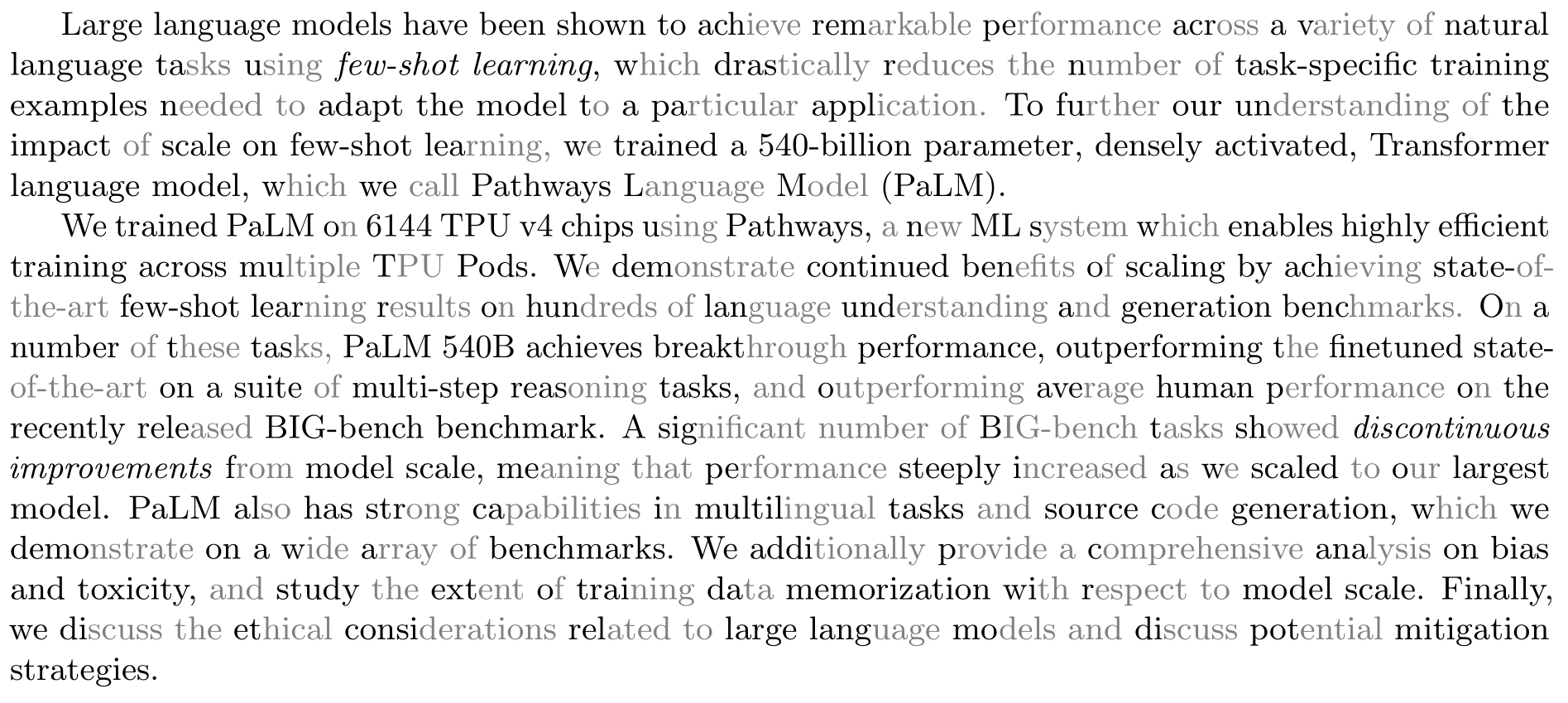

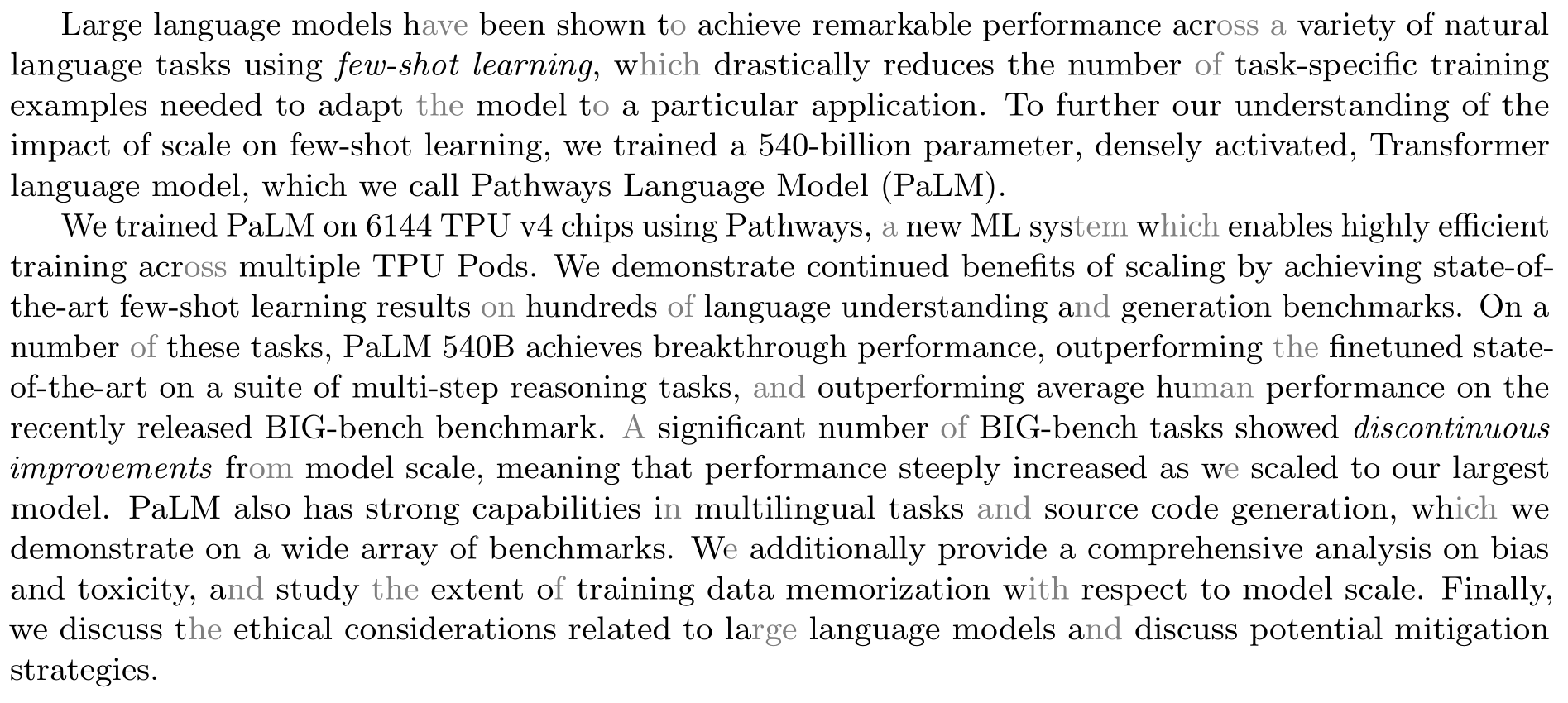

For performance reasons, instead of feeding the entire page from the begining to the point where I want to predict, I only feed the last n characters before the prediction points. Here is a comparison of the results for different values of n:

It seems that we reach dimininshing returns at about 30 characters.

Enabling in Sioyek

If you want to try these out on a PDF file, you can download the latest experimental version of sioyek. Here are the relevant configurations:

text_summary_url: The url of the server which provides the summary. I did not include the server in sioyek itself because I did’nt want to bundle the entire pytorch with sioyek for an experimental feature. Instead I created a python script which runs a local server providing this feature. You can find the script here. The default value ishttp://localhost:5000/which is the default port of the script, so if you don’t change the script you don’t have to set this value.text_summary_should_refine: 1 if you want refinement and 0 otherwisetext_summary_should_fill: 1 if you want filling and 0 otherwisetext_summary_context_size: number of characters in context for next character prediction

For example here is the relevant parts in my prefs_user.config:

text_summary_should_refine 1

text_summary_should_fill 1

text_summary_context_size 40

Of course we have default values for all of these configs so you don’t have to change anything if you are comfortable with the default settings.

Now, in order to use this feature, run the summary_highlight_server.py script and then enable highlights in sioyek by executing toggle_fastread command (press : and type toggle_fastread, it may take a few seconds to compute highlights depending on your GPU).

Notes and Improvements

- I don’t have any data on whether this actually improves reading speed or not. But in my own subjective experience, I think it does.

- Currently this is too GPU-intensive to be deployed. Of course using a full-fledged language model for this task is overkill. Also, as mentioned in huggingface repo page, this model is not optimized for language generation. Probably the best option would be a relatively small RNN language model, however, I could not find any decent pre-trained character-based RNN language models and I don’t have the resources to train it myself. Even simpler non-neural network models are probably good enough.

- One limitation of this approach is that we don’t consider the future context to determine whether to remove a word. For example consider the snippet “task-specific training examples” (in our examples all three words were highlighted). But maybe if we knew that we were going to include both “task-specific” and “examples” then a language model could predict that the middle word in “task-specific [MASK] examples” is “training” with high probability and we could unhighlight the word “training”. However, this is probably too computationally intensive to be worth it.

- Is it possible to use language models that use non-character tokens for this task? That would help a lot since most pre-trained language models are not character-based.